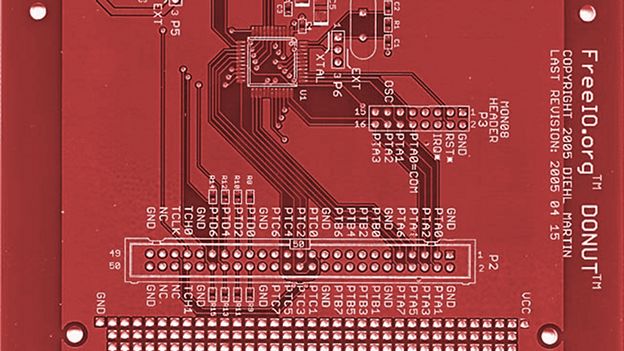

SERB Robot, CC photo by flickr user oomlout

FreeIO.org is currently running a poll to determine what sort of free hardware project the community would most like to see developed. At present the poll is leaning heavily towards robots, so I thought it would be worthwhile to do a quick survey of existing free/open hardware robot projects to see what there is to work with and improve on. There are a lot of FLOSS (Free/Libre Open Source Software) robotics projects out there too, but this article focuses on hardware projects that are under free hardware licenses. See the FreeIO.org “about page” to learn more about the concepts of free/open hardware.

I’ve attempted to list the projects roughly in chronological order by creation date. To qualify for this list, a project must meet three criteria: 1) it must be a complete mobile robot, not just part of a robot such as a manipulator arm; 2) the hardware design documents (e.g. CAD files, schematics) must be available under a free license (i.e. a license that protects the user’s basic freedoms; licenses with commercial-use restrictions are NOT free/open licenses); and 3) at least one working robot must have been developed and demonstrated. Projects that are still in the planning stages didn’t make the list, as we’d like to see proven designs that have been well-tested in the real world.

Read on for the full list of free/open hardware robot designs!